“Who in here has trust in AI?” asked Scott Zoldi, Chief Analytics Officer at FICO. The silence from the London Blockchain Conference crowd was deafening.

That’s because it is becoming increasingly hard to place trust in an emerging technology that is constantly evolving and advancing to unknown heights.

And this distrust isn’t confined to the general public either. Financial institutions, tech companies and a wide range of public and private sector businesses, whilst initially opening their arms to AI, are finding it difficult to contain its rapid ascent.

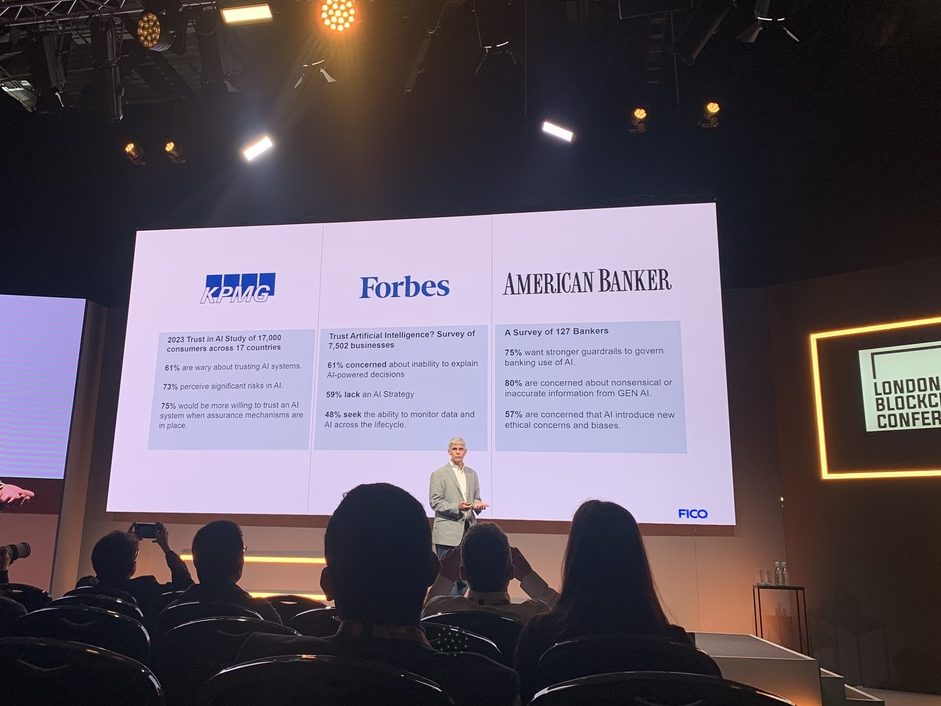

According to the presentation on the screen, American Banker found that 75% of bankers want stronger AI guardrails. Forbes reported that 61% of businesses are concerned about the difficulty of explaining how AI functions, whilst KPMG revealed that 75% of consumers would be more willing to trust AI systems once assurance mechanisms are in place.

Zoldi also explained that regulators now view AI as “high-risk”, adding that the US has described some of these models as propagating “wide-scale discrimination”.

Responsible AI

From the outset, then, it appears that AI has a trust problem, and Zoldi, who has worked extensively on AI models for FICO over the past eight years, admits as much.

What the Chief Analytics Officer found whilst working on these models was that a term was needed to describe best practices for AI usage, a guide for thinking through the safety and security of the technology going forward. Enter Responsible AI.

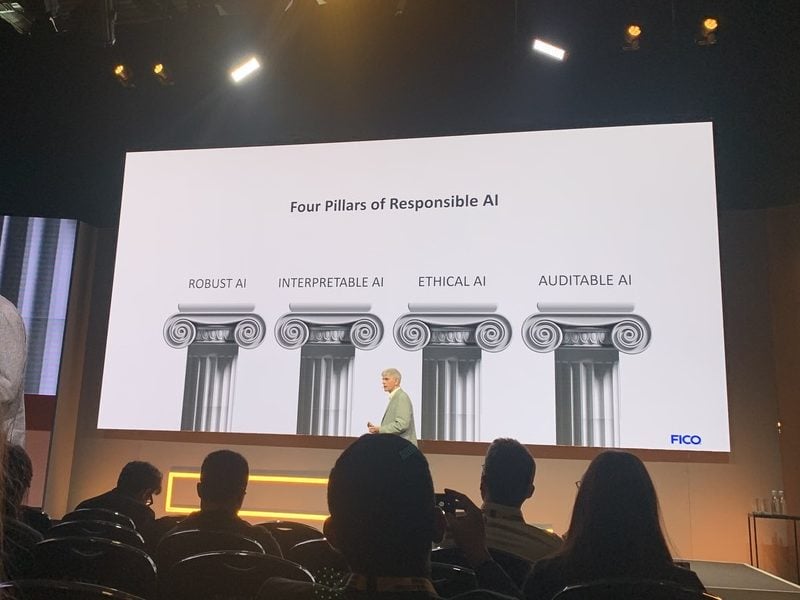

“Responsible AI is built on a set of pillars that establish that you have taken the time and effort to develop the best models of programming,” remarked Zoldi.

“Responsible AI is how regulation can be met, and is also how we can establish trust. But we do not have a set of standards as an industry to use for Responsible AI.”

Zoldi organised his view of Responsible AI into four key pillars: robust AI, interpretable AI, ethical AI and auditable AI.

Robust AI underlines the seriousness of building a model, which needs the programmer’s full and undivided attention: collecting data and identifying relationships that can be learned is a process that can take months, and even upwards of a year, to finalise.

Interpretable AI exposes the learned relationships once the algorithm has been encoded, so that a human being can understand exactly what is being learned and justify its use. Ethical AI follows swiftly after: the need to make sure that the data collated for the algorithm is as unbiased as it can be.

Auditable AI is almost the de facto definition of Responsible AI. Zoldi explains that it is “providing that source of truth, a focus on how I can govern, I can track whether or not the model is built properly and did I make the right decisions”.

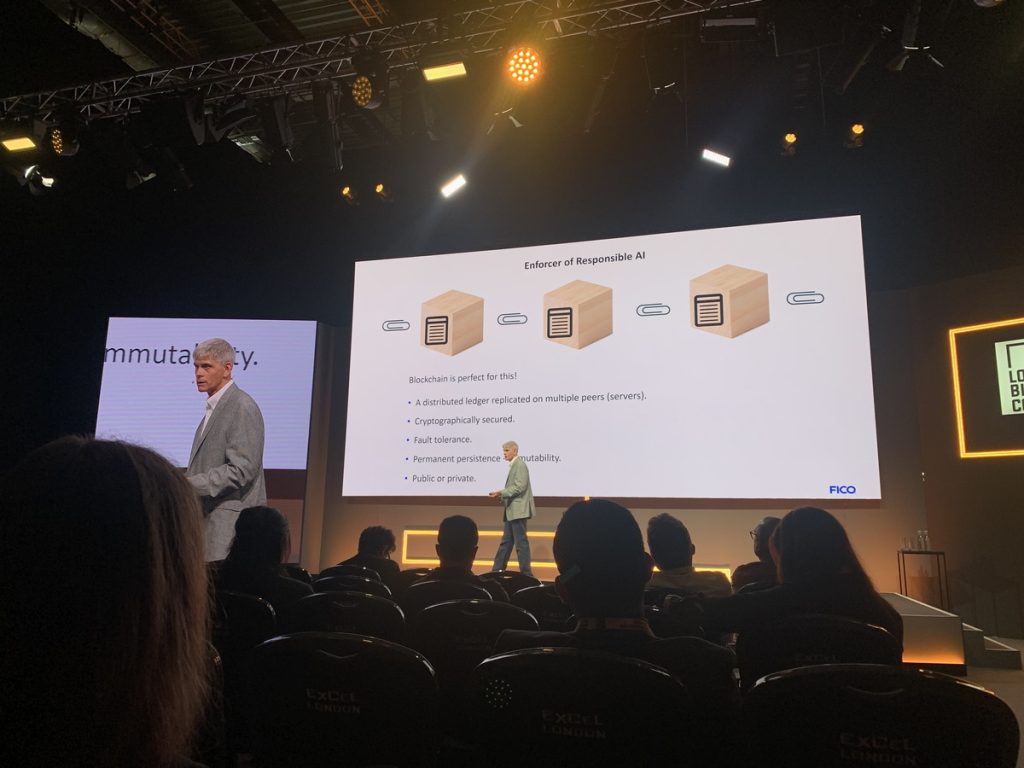

Blockchain has a role to play in Responsible AI

Where does blockchain come into this? The technology has had its fair share of doubters in the past, and has even more today.

At its core, blockchain is a data storage system that can hold substantial amounts of data and is easily accessible. The technology can play an integral role in managing the underlying data accumulated for various AI models, speeding up the process whilst keeping it secure.

Zoldi described blockchain as the ‘killer app’ for AI development: it can underpin both private and public networks, opening them up to greater transparency and helping to produce honourable AI.

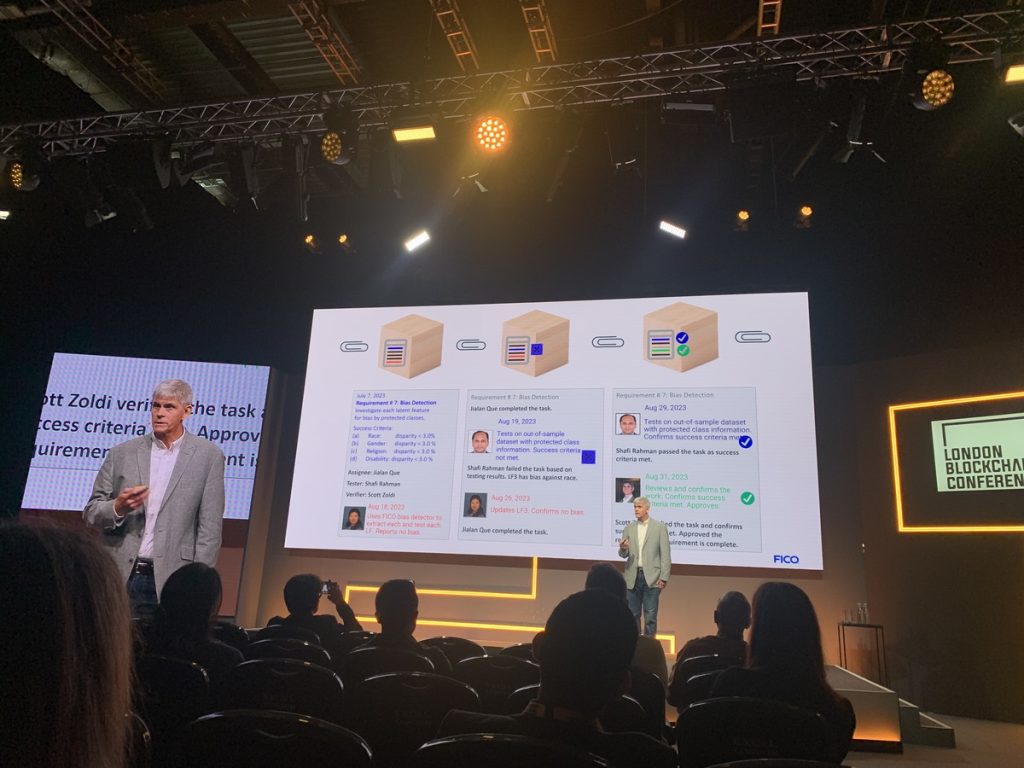

He stressed three roles that are critical to establishing a blockchain-based AI model, the first being the ‘assignee’. This is the data scientist who builds the model and sources all of its data, and who takes responsibility for that work.

The second role, the ‘tester’, is under the same level of scrutiny. They need to independently assess and verify the success criteria upon completion, and check whether the result aligns with the initial objectives.

Last is the ‘reviewer’, the final sign-off point before the AI model can move on to its initial pilot phase. The blockchain network collates all the data and timelines, enabling each role to go back and analyse if and where a mistake was made, which speeds up the process.
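To make the idea concrete, the three roles can be pictured as entries in an append-only, hash-linked log. The Python sketch below is purely illustrative and is not FICO’s or Zoldi’s implementation: the `AuditChain` class, its field names, the people and the timestamps are all assumptions made for this example, and a single in-memory list stands in for a real distributed blockchain network.

```python
import hashlib
import json
from dataclasses import dataclass, field

# Role names come from the talk; everything else here is hypothetical.
ROLES = ("assignee", "tester", "reviewer")
GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first block


@dataclass
class AuditChain:
    """Append-only, hash-linked log: a toy stand-in for a blockchain."""
    blocks: list = field(default_factory=list)

    @staticmethod
    def _digest(body: dict) -> str:
        # Serialise with sorted keys so the same content always hashes the same.
        payload = json.dumps(body, sort_keys=True).encode("utf-8")
        return hashlib.sha256(payload).hexdigest()

    def record(self, role: str, person: str, note: str, timestamp: str) -> dict:
        """Append one step of model development, linked to the previous step."""
        if role not in ROLES:
            raise ValueError(f"unknown role: {role}")
        body = {
            "index": len(self.blocks),
            "role": role,
            "person": person,
            "note": note,
            "timestamp": timestamp,
            "prev_hash": self.blocks[-1]["hash"] if self.blocks else GENESIS_HASH,
        }
        block = {**body, "hash": self._digest(body)}
        self.blocks.append(block)
        return block

    def verify(self) -> bool:
        """Return True only if no block has been altered or reordered."""
        prev_hash = GENESIS_HASH
        for block in self.blocks:
            body = {k: v for k, v in block.items() if k != "hash"}
            if block["prev_hash"] != prev_hash or block["hash"] != self._digest(body):
                return False
            prev_hash = block["hash"]
        return True

    def history(self, role: str) -> list:
        """Let a role look back over its own recorded steps."""
        return [b for b in self.blocks if b["role"] == role]


# Illustrative entries only: names, notes and timestamps are made up.
chain = AuditChain()
chain.record("assignee", "data scientist", "sourced data and built model", "2024-05-01T09:00:00Z")
chain.record("tester", "independent tester", "success criteria verified", "2024-05-08T14:30:00Z")
chain.record("reviewer", "reviewer", "approved for pilot phase", "2024-05-10T11:00:00Z")
print(chain.verify())  # True: the record is intact

chain.blocks[1]["note"] = "criteria skipped"  # someone quietly edits the tester's entry
print(chain.verify())  # False: the stored hash no longer matches the content
```

Because each entry carries the hash of the one before it, changing any earlier step invalidates the record, which is the property that would let an assignee, tester or reviewer trust the timeline they are looking back over.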

How long before we fully trust AI? If ever?

The crowd at the London Blockchain Conference panel may or may not have warmed to Responsible AI, but it is encouraging that methods are being built to keep pace with the erratic way AI is evolving.

There are also concerted efforts being made by global policymakers. The UK, the US, China and a whole host of other countries signed up to the Bletchley Declaration last November, which encourages collaboration on best practices for AI regulation.

The UK and US even established their own agreement to work on how consumers of this emerging technology will be safeguarded.

Zoldi, even after outlining methods for Responsible AI, admitted that it would be foolish to completely trust the progression of AI today. However, it is worth noting that developers, programmers and policymakers are acting now, before it can completely take over.